Larry Wasserman has started the Normal Deviate blog, which I added to the blogroll on the right.

Manfred Warmuth points out the UCSC machine learning summer school running July 9-20, which may be of particular interest to those in Silicon Valley.

Machine learning and learning theory research

People are naturally interested in slicing the ICML acceptance statistics in various ways. Here’s a rundown for the top categories.

| Accepted / submitted | Relative to the 5% tail interval | Category |
| --- | --- | --- |
| 18/66 = 0.27 | in (0.18, 0.36) | Reinforcement Learning |
| 10/52 = 0.19 | in (0.17, 0.37) | Supervised Learning |
| 9/51 = 0.18 | not in (0.18, 0.37) | Clustering |
| 12/46 = 0.26 | in (0.17, 0.37) | Kernel Methods |
| 11/40 = 0.28 | in (0.15, 0.4) | Optimization Algorithms |
| 8/33 = 0.24 | in (0.15, 0.39) | Learning Theory |
| 14/33 = 0.42 | not in (0.15, 0.39) | Graphical Models |
| 10/32 = 0.31 | in (0.15, 0.41) | Applications (+5 invited) |
| 8/29 = 0.28 | in (0.14, 0.41) | Probabilistic Models |
| 13/29 = 0.45 | not in (0.14, 0.41) | NN & Deep Learning |
| 8/26 = 0.31 | in (0.12, 0.42) | Transfer and Multi-Task Learning |
| 13/25 = 0.52 | not in (0.12, 0.44) | Online Learning |
| 5/25 = 0.20 | in (0.12, 0.44) | Active Learning |
| 6/22 = 0.27 | in (0.14, 0.41) | Semi-Supervised Learning |
| 7/20 = 0.35 | in (0.1, 0.45) | Statistical Methods |
| 4/20 = 0.20 | in (0.1, 0.45) | Sparsity and Compressed Sensing |
| 1/19 = 0.05 | not in (0.11, 0.42) | Ensemble Methods |
| 5/18 = 0.28 | in (0.11, 0.44) | Structured Output Prediction |
| 4/18 = 0.22 | in (0.11, 0.44) | Recommendation and Matrix Factorization |
| 7/18 = 0.39 | in (0.11, 0.44) | Latent-Variable Models and Topic Models |
| 1/17 = 0.06 | not in (0.12, 0.47) | Graph-Based Learning Methods |
| 5/16 = 0.31 | in (0.13, 0.44) | Nonparametric Bayesian Inference |
| 3/15 = 0.20 | in (0.07, 0.47) | Unsupervised Learning and Outlier Detection |
| 7/12 = 0.58 | not in (0.08, 0.50) | Gaussian Processes |
| 5/11 = 0.45 | not in (0.09, 0.45) | Ranking and Preference Learning |
| 2/11 = 0.18 | in (0.09, 0.45) | Large-Scale Learning |
| 0/9 = 0.00 | in [0, 0.56) | Vision |
| 3/9 = 0.33 | in [0, 0.56) | Social Network Analysis |
| 0/9 = 0.00 | in [0, 0.56) | Multi-agent & Cooperative Learning |
| 2/9 = 0.22 | in [0, 0.56) | Manifold Learning |
| 4/8 = 0.50 | not in [0, 0.5) | Time-Series Analysis |
| 2/8 = 0.25 | in [0, 0.5] | Large-Margin Methods |
| 2/8 = 0.25 | in [0, 0.5] | Cost Sensitive Learning |
| 2/7 = 0.29 | in [0, 0.57) | Recommender Systems |
| 3/7 = 0.43 | in [0, 0.57) | Privacy, Anonymity, and Security |
| 0/7 = 0.00 | in [0, 0.57) | Neural Networks |
| 0/7 = 0.00 | in [0, 0.57) | Empirical Insights |
| 0/7 = 0.00 | in [0, 0.57) | Bioinformatics |
| 1/6 = 0.17 | in [0, 0.5) | Information Retrieval |
| 2/6 = 0.33 | in [0, 0.5) | Evaluation Methodology |

Update: See Brendan’s graph for a visualization.

I usually find these numbers hard to interpret. At the grossest level, all areas have significant selection. At a finer level, one way to add further interpretation is to pretend that the acceptance rate of all papers is 0.27, then compute a 5% lower tail and a 5% upper tail. With 40 categories, we expect to have about 4 violations of tail inequalities. Instead, we have 9, so there is some evidence that individual areas are particularly hot or cold. In particular, the hot topics are Graphical models, Neural Networks and Deep Learning, Online Learning, Gaussian Processes, Ranking and Preference Learning, and Time Series Analysis. The cold topics are Clustering, Ensemble Methods, and Graph-Based Learning Methods.
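For readers who want to try the tail computation themselves, here is a minimal sketch. It assumes an exact binomial model with a common acceptance rate of 0.27 and takes the 5% and 95% quantiles as the tails. The function names are illustrative, and since the exact method behind the table is not stated here, the intervals it produces need not match the table digit for digit.

```python
from math import comb

def binom_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

def binom_quantile(q, n, p):
    """Smallest k with P(X <= k) >= q."""
    cdf = 0.0
    for k in range(n + 1):
        cdf += binom_pmf(k, n, p)
        if cdf >= q:
            return k
    return n  # guard against floating-point shortfall in the CDF

def tail_interval(n, p=0.27, tail=0.05):
    """Acceptance rates at the lower and upper `tail` quantiles for n submissions."""
    lo = binom_quantile(tail, n, p)
    hi = binom_quantile(1 - tail, n, p)
    return lo / n, hi / n

# Each of the two tails has mass of about 5%, so roughly 10% of categories
# should fall outside their interval by chance alone.
expected_violations = 40 * 2 * 0.05
```

With 40 categories this gives the "about 4" expected violations mentioned above, against the 9 observed.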

We also experimented with AIStats resubmits (3/4 accepted) and NFP papers (4/7 accepted) but the numbers were too small to read anything significant.

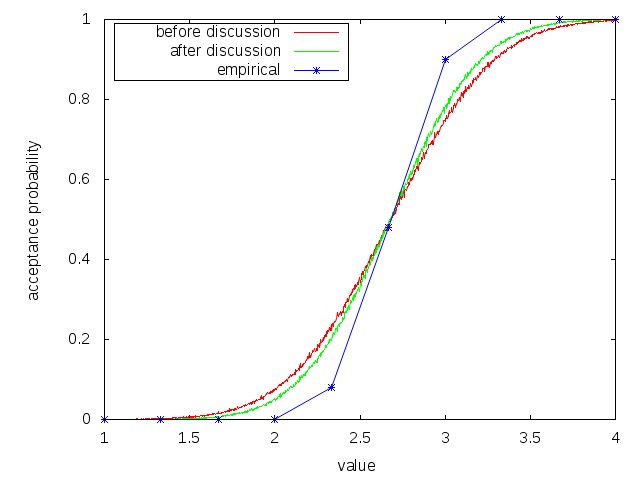

One thing that surprised me was how uniform decisions were as a function of average score in reviews. All reviews included a decision from {Strong Reject, Weak Reject, Weak Accept, Strong Accept}. These were mapped to numbers in the range {1,2,3,4}. In essence, average review score < 2.2 meant 0% chance of acceptance, and average review score > 3.1 meant acceptance. Due to discretization in the number of reviewers and review scores there were only 3 typical uncertain outcomes:

One question I’ve always wondered is: How much variance is there in the accept/reject decision? In general, correlated assignment of reviewers can greatly increase the amount of variance, so one of our goals this year was doing as independent an assignment as possible. If you accept that as independence, we essentially get 3 samples for each paper where the average standard deviation of reviewer scores before author feedback and discussion is 0.64. After author feedback and discussion the standard deviation drops to 0.51. If we pretend that papers have an intrinsic value between 1 and 4 then think of reviews as discretized gaussian measurements fed through the above decision criteria, we get the following:

There are great caveats to this picture. For example, treating the AC’s decision as random conditioned on the reviewer average is a worst-case analysis. The reality is that ACs are removing noise from the few events that I monitored carefully, although it is difficult to quantify this. Similarly, treating the reviews observed after discussion as independent is clearly flawed. A reasonable way to look at it is: author feedback and discussion get us about 1/3 or 1/4 of the way to the final decision from the initial reviews.
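The measurement model described above can be sketched as a small simulation. This is an illustration under stated assumptions, not the analysis we actually ran: the function name, the clipping of scores to {1,2,3,4}, and the use of three independent reviewers are choices made for the sketch. Only the thresholds (2.2 and 3.1) and the post-discussion standard deviation (0.51) come from the text. With three reviewers, the only averages that land between the thresholds are 7/3, 8/3, and 3, and the sketch simply labels those "uncertain" rather than guessing the AC's decision.

```python
import random

def simulate_paper(value, sigma=0.51, reviewers=3, trials=10000, seed=0):
    """Fraction of simulated review panels ending in each outcome for a paper
    with the given intrinsic value (a number between 1 and 4)."""
    rng = random.Random(seed)
    counts = {"reject": 0, "uncertain": 0, "accept": 0}
    for _ in range(trials):
        # Each review is a Gaussian measurement, rounded and clipped to {1,2,3,4}.
        scores = [min(4, max(1, round(rng.gauss(value, sigma))))
                  for _ in range(reviewers)]
        average = sum(scores) / reviewers
        if average < 2.2:
            counts["reject"] += 1
        elif average > 3.1:
            counts["accept"] += 1
        else:
            counts["uncertain"] += 1
    return {outcome: n / trials for outcome, n in counts.items()}
```

Calling `simulate_paper(2.6)` for a borderline paper, for instance, shows how the probability mass is spread across the three outcomes under these assumptions.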

Conditioned on the papers, discussion, author feedback and reviews, ACs are pretty uniform in their decisions, with ~30 papers where ACs disagreed on the accept/reject decision. For half of those, the ACs discussed further and agreed, leaving Joelle and me a feasible quantity of cases to look at (plus several other exceptions).

At the outset, we promised a zero-spof reviewing process. We actually aimed higher: at least 3 people needed to make a wrong decision for the ICML 2012 reviewing process to kick out a wrong decision. I expect this happened a few times given the overall level of quality disagreement and quantities involved, but hopefully we managed to reduce the noise appreciably.

The accepted papers are up in full detail. We are still struggling with the precise program itself, but that’s coming along. Also note the May 13 deadline for early registration and room booking.

Yahoo! laid off people. Unlike every previous time there have been layoffs, this is serious for Yahoo! Research.

We had advance warning from Prabhakar through the simple act of leaving. Yahoo! Research was a world class organization that Prabhakar recruited much of personally, so it is deeply implausible that he would spontaneously decide to leave. My first thought when I saw the news was “Uhoh, Rob said that he knew it was serious when the head of ATnT Research left.” In this case it was even more significant, because Prabhakar recruited me on the premise that Y!R was an experiment in how research should be done: via a combination of high quality people and high engagement with the company. Prabhakar’s departure is a clear end to that experiment.

The result is ambiguous from a business perspective. Y!R clearly was not capable of saving the company from its illnesses. I’m not privy to the internal accounting of impact and this is the kind of subject where there can easily be great disagreement. Even so, there were several strong direct impacts coming from the machine learning, economics, and algorithms groups.

Y!R clearly was excellent from an academic research perspective. On a per person basis in relevant subjects, it was outstanding. One way to measure this is by noticing that both ICML and KDD had (co)program chairs from Y!R. It turns out that talking to the rest of the organization doing consulting, architecting, and prototyping on a minority basis helps research by sharpening the questions you ask more than it hinders by taking up time. The decision to participate in this experiment was a good one for me personally.

It has been clear in Silicon Valley, academia, and pretty much everywhere else that people at Yahoo!, including Yahoo! Research, have been looking around for new positions. Maintaining the excellence of Y!R in a company that has been under prolonged stress was challenging leadership-wise. Consequently, the abrupt departure of Prabhakar and an apparent lack of appreciation by the new CEO created a crisis of confidence. Many people who were sitting on strong offers quickly left, and everyone else started looking around.

In this situation, my first concern was for colleagues, both in Machine Learning across the company and the Yahoo! Research New York office.

Machine Learning turns out to be a very hot technology. Every company and government in the world is drowning in data, and Machine Learning is the prime tool for actually using it to do interesting things. More generally, the demand for high quality seasoned machine learning researchers across startups, mature companies, government labs, and academia has been astonishing, and I expect the outcome to reflect that. This is remarkably different from the cuts that hit ATnT research in late 2001 and early 2002 where the famous machine learning group there took many months to disperse to new positions.

In the New York office, we investigated many possibilities hard enough that it became a news story. While that article is wrong in specifics (we ended up not fired for example, although it is difficult to discern cause and effect), we certainly shook the job tree very hard to see what would fall out. To my surprise, amongst all the companies we investigated, Microsoft had a uniquely sufficient agility, breadth of interest, and technical culture, enabling them to make offers that I and a significant fraction of the Y!R-NY lab could not resist. My belief is that the new Microsoft Research New York City lab will become an even greater techhouse than Y!R-NY. At a personal level, it is deeply flattering that they have chosen to create a lab for us on short notice. I will certainly do my part chasing the greatest learning algorithms not yet invented.

In light of this, I would encourage people in academia to consider Yahoo! in as fair a light as possible in the current circumstances. There are and will be some serious hard feelings about the outcome as various top researchers elsewhere in the organization feel compelled to look for jobs and leave. However, Yahoo! took a real gamble supporting a research organization about 7 years ago, and many positive things have come of this gamble from all perspectives. I expect almost all of the people leaving to eventually do quite well, and often even better.

What about ICML? My second thought on hearing about Prabhakar’s departure was “I really need to finish up initial paper/reviewer assignments today before dealing with this”. During the reviewing period where the program chair load is relatively light, Joelle handled nearly everything. My great distraction ended neatly in time to help with decisions at ICML. I considered all possibilities in accepting the job and was prepared to simply put aside a job search for some time if necessary, but the timing was surreally perfect. All signs so far point towards this ICML being an exceptional ICML, and I plan to do everything that I can to make that happen. The early registration deadline is May 13.

What about KDD? Deepak was sitting on an offer at Linkedin and simply took it, so the disruption there was even more minimal. Linkedin is a significant surprise winner in this affair.

What about Vowpal Wabbit? Amongst other things, VW is the ultrascale learning algorithm, not the kind of thing that you would want to put aside lightly. I negotiated to continue the project and succeeded. This surprised me greatly—Microsoft has made serious commitments to supporting open source in various ways and that commitment is what sealed the deal for me. In return, I would like to see Microsoft always at or beyond the cutting edge in machine learning technology.

added: crosspost on CACM.

added: Lance, Jennifer, NYTimes, Vader

This is a rather long post, detailing the ICML 2012 review process. The goal is to make the process more transparent, help authors understand how we came to a decision, and discuss the strengths and weaknesses of this process for future conference organizers.

Microsoft’s Conference Management Toolkit (CMT)

We chose to use CMT over other conference management software mainly because of its rich toolkit. The interface is sub-optimal (to say the least!) but it has extensive capabilities (to handle bids, author response, resubmissions, etc.), good import/export mechanisms (to process the data elsewhere), excellent technical support (to answer late night emails, add new functionalities). Overall, it was the right choice, although we hope a designer will look at that interface sometime soon!

Toronto Matching System (TMS)

TMS is now being used by many major conferences in our field (including NIPS and UAI). It is an automated system (developed by Laurent Charlin and Rich Zemel at U. Toronto) to match reviewers to papers, based on an analysis of each reviewer’s publications. TMS collects publications from reviewers, parses them into features and applies unsupervised or supervised learning techniques to predict the relevance of any target paper for any reviewer. We convinced TMS to integrate with CMT and funded Laurent’s work for that. Reviewers were asked to put in a publication list for TMS to parse. For those who failed to do so (after many reminders!), we manually added that information from public sources.

The Program Committee

Recruiting a program committee that is both large and highly qualified is difficult these days. We sent out 69 area chair invitations; 50 (highly qualified!) people accepted. Each of these area chairs was asked to nominate a list of potential reviewers. We sent out approximately 700 invitations for program committee members; 389 accepted. A number of additional PC members were recruited during the review process (most of them for 1-2 papers), for a total of 470 active PC members. In terms of seniority, the final PC contains about 15% students, 80% researchers, and 5% other.

The Surge (ICML + 50%)

The first big challenge came on the submission deadline. In the past few years, ICML had consistently received ~550-600 submissions. This year, we had a 50% increase, to 890 submissions. We had recruited a PC that could comfortably handle 700 papers. Dealing with an extra 200 papers was not an easy task.

About 10 submissions were rejected without review for various reasons (severe formatting issues, extra pages, non-anonymization).

Bidding

An unsupervised version of TMS was used to generate a list of candidate papers for each reviewer and area chair. This was done working closely with Laurent Charlin of TMS, using validation on previous NIPS data. CMT did not have the functionality to show a good list of candidate papers to reviewers, so we crafted an interface to show this list and let reviewers use that in conjunction with CMT. Ideally, this will be better incorporated in CMT in the future.

When you ask a group of scientists to run a conference, you must expect a few experiments will take place…. And so we decided to assess the usefulness of TMS scoring for generating lists of papers to bid on. To do this, we (randomly) assigned PC members to 1 of 3 groups. One group saw a list purely based on TMS scores. Another group received a list based on the matching between their subject area and that of the paper (referred to as the “relevance” score in CMT). The third group received a list based on a mix of both TMS and relevance. Reviewers were allowed to bid on any paper (excluding those with which they had a conflict); the lists were provided to help them efficiently sort through the large number of papers. We then compared the set of bids for a reviewer, with the list of suggestions, and measured the correspondence.

The following is the Discounted Cumulative Gain (DCG) of each list with respect to the bidding scores, averaged separately for each group. Note that each group was only presented with their corresponding list and not the others.

| | Group: CMT | Group: TMS | Group: CMT+TMS |
| --- | --- | --- | --- |
| Sorting by CMT scores | 6.11 out of 12.64 (48%) | 4.98 out of 13.63 (36%) | 4.87 out of 13.55 (35%) |
| Sorting by TMS score | 4.06 out of 12.64 (32%) | 6.43 out of 13.63 (47%) | 5.72 out of 13.55 (42%) |
| Sorting by TMS+CMT | 4.77 out of 12.64 (37%) | 6.11 out of 13.63 (44%) | 6.71 out of 13.55 (49%) |
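For reference, here is a minimal sketch of how a DCG figure like those in the table can be computed. It assumes the common formulation in which the relevance at rank i is discounted by log2(i + 1), and it treats a reviewer's bid values as the relevance scores. The exact variant and any rank cutoff used for the numbers above are not stated here, so both are assumptions, as are the function names.

```python
from math import log2

def dcg(relevances):
    """Discounted cumulative gain of relevance scores listed in ranked order."""
    return sum(rel / log2(rank + 1)
               for rank, rel in enumerate(relevances, start=1))

def dcg_fraction(relevances):
    """DCG of the given ordering as a fraction of the best achievable DCG,
    i.e. the DCG of the same scores sorted from highest to lowest."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0
```

An entry such as "6.11 out of 12.64 (48%)" reads naturally as an achieved DCG next to the best achievable one, which is what `dcg_fraction` expresses as a ratio.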

A micro-survey was also run to collect further information on how users liked their short list. 85% of the participants indicated that they had used the list interface provided to them. The following is the preference indicated by each group (~75 reviewers in each group, ~2% error):

| | Group: CMT | Group: TMS | Group: CMT+TMS |
| --- | --- | --- | --- |
| Preferred CMT over list | 15% | 12% | 8% |
| Preferred list+CMT | 81% | 83% | 83% |
| Preferred list over CMT | 4% | 5% | 9% |

It is obvious from the above that most participants found the list useful in conjunction with CMT (suggesting that the list should be integrated inside CMT). We can also see that those who were presented with a list based on TMS scores were more likely to find the list useful.

Note that all of the above was done in a long hectic but fun weekend.

Imputing Missing Bids

CMT assumes that the reviewers are not willing to review a paper unless stated otherwise. It does not differentiate between an unseen (but potentially relevant) paper and a paper that has been seen and ignored. This is a real shortcoming when it comes to matching papers to reviewers, especially for those reviewers that did not bid often. To mitigate this problem, we used the click information on the shortlist presented to the reviewers to find out which papers had been observed and ignored. We then imputed these cases as real non-willing bids.
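The click-based imputation can be sketched in a few lines. This is only an illustration: the data layout (a per-reviewer dictionary of bids and a set of papers the reviewer was observed to have looked at) and the function name are hypothetical, and encoding a non-willing bid as 0 is an assumption of the sketch.

```python
def impute_seen_as_zero(bids, seen):
    """Return a copy of one reviewer's bids in which every paper that was
    seen but not bid on gets an explicit non-willing bid of 0.

    bids: dict mapping paper id -> bid value entered by the reviewer
    seen: set of paper ids the reviewer looked at on the shortlist
    """
    imputed = dict(bids)
    for paper in seen:
        # Keep real bids untouched; only fill in papers with no bid at all.
        imputed.setdefault(paper, 0)
    return imputed

# Papers the reviewer never saw stay missing rather than being treated as refusals.
example = impute_seen_as_zero({"p1": 3}, {"p1", "p2"})
```

The point of the distinction is that an unseen paper carries no information about willingness, whereas a seen-and-skipped paper does.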

Around 30 reviewers did not provide any bids (and many had only a few). This is problematic because the tools used to do the actual reviewer-paper matching tend to assign the papers without any bids to the reviewers who did not bid, regardless of the match in expertise.

Once the bidding information was in and imputation was done, we now had to fill in the rest of the paper-reviewer bidding matrix to mitigate the problem with sparse bidders. This was done, once again, through TMS, but this time using a supervised learning approach.

Using supervised learning was more delicate than expected. To deal with the wildly varying number of bids per person, we imputed zero bids, first from papers that were plausibly skipped over, and if necessary at random from papers not bid on such that each person had the same expected bid in the dataset. From this dataset, we held out a random bid per person, and then trained to predict well the heldout bid. Most optimization approaches performed poorly due to the number of features greatly exceeding the number of labels. The best approach we found used the online algorithms in Vowpal Wabbit with a mass personalized training method similar to the one discussed here. This trained predictor was used to predict bid values for the full paper-reviewer bid matrix.

Automated Area Chair and First Reviewer Assignment

Once we had the imputed paper-reviewer bidding matrix, CMT was used to generate the actual match between papers and area chairs, and (separately) between papers and reviewers. Each paper had two area chairs (sometimes called “meta-reviewers” in CMT) assigned to it, one primary, one secondary, by running two rounds of assignments (so that the primary was usually the “better” match). One reviewer per paper was also assigned automatically by CMT in a similar fashion. CMT provides proper load balancing, so that all area chairs and reviewers had similar loads.

Manual Checks of the Automated Assignments

Before finalizing the automated assignment, we manually looked through the list of papers to fix any potential problems that were not handled by the automated process. The two major cases were papers that did not go through the TMS system (authors did not agree to do so), and cases of poor primary-secondary meta-reviewer pairs (when the two area chairs are judged to be too close to offer independent assessment, e.g. working at the same institution, previous supervisor-student relationship).

Second and Third Reviewer Assignment

Once the initial assignments were announced, we asked the two area chairs for a given paper to each manually assign another reviewer from the PC. To help area chairs with this, we generated a shortlist of 10 recommended reviewers for each paper (using the estimated bid matrix and TMS score, with the CMT matching algorithm for load balancing of reviewer suggestions). Area chairs were free to use this list, select from the complete program committee, or seek an outside reviewer, who was then added to the PC; this last option was used 80 times. The load for each reviewer was restricted to at most 7 papers, with exceptions when they explicitly agreed to more.
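As a rough illustration of the shortlist idea, the sketch below ranks reviewers for one paper by a predicted score and skips anyone already at the load cap. It is not the CMT matching algorithm, whose details are not described here. It is a simple greedy stand-in, and the function name and data layout are hypothetical. Only the shortlist size of 10 and the cap of 7 papers come from the text.

```python
def shortlist(paper_scores, loads, k=10, max_load=7):
    """Top-k reviewers for one paper among those still under the load cap.

    paper_scores: dict mapping reviewer -> predicted suitability for this paper
    loads: dict mapping reviewer -> number of papers already assigned
    """
    eligible = [r for r in paper_scores if loads.get(r, 0) < max_load]
    return sorted(eligible, key=lambda r: paper_scores[r], reverse=True)[:k]

# Reviewer "a" is the best match but already has 7 papers, so is skipped.
picked = shortlist({"a": 0.9, "b": 0.8, "c": 0.7}, {"a": 7, "b": 2}, k=2)
```

A real matching system balances loads across all papers at once rather than one paper at a time, which this greedy version does not attempt.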

The second and third uses of TMS, including the new supervised learning system, led to another long hectic weekend with Laurent, Mahdi, Joelle, and John all deeply involved.

Reviews

Most papers received at least 3 full reviews in the first round. Reviewers could not see each other’s reviews until they submitted their own. ML-Journaled submissions (see double submission guide) were reviewed only by two area chairs. In a small number of regular submissions (less than 10), we received 2 very negative reviews and notified the third reviewer (who was usually late by this point!) that we would not need their review.

Authors’ Response

Authors were given a chance to respond to the reviews during a short feedback period. This is becoming a standard practice in machine learning conferences. Authors were also allowed to upload a new version of the paper. The motivation here is that in some cases, it is easier to show the changes directly in the paper, rather than discuss them separately.

Our analysis shows that authors’ responses and subsequent discussions by reviewers made significant changes to the scoring of papers. A total of ~35% of the papers had some change in their scores after the author feedback. The average score for ~50% of the papers went down, stayed the same for ~10%, and went up for the other ~40%. The variance on the scores decreased by ~20%, indicating some convergence in the decisions.

Final Decisions

To help us better decide on the quality of the papers, we asked the primary area chairs to provide a meta-review for each of their papers. For papers without unanimous review decisions (i.e. some reviews wanted to accept and some wanted to reject), we asked the secondary area chair to (independently) fill in a meta-review, recommending whether to accept or reject the paper. A total of 1214 meta-reviews were provided. There were also 20 papers for which a 4th review was added in this period.

In all cases where the primary and secondary area chairs disagreed on the decision, the program chairs were directly involved, reviewing all the evidence (reviews, rebuttal, discussion, often the paper itself), and entering into a discussion (usually via email) with the area chairs, until a unanimous decision was achieved.

A total of 243 papers (27% of submissions) were accepted. Author notifications were sent out on April 30.