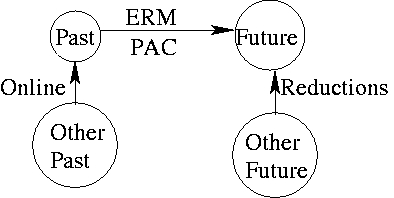

One of the most confusing things about understanding learning theory is the vast array of differing assumptions. Some critical thought about which of these assumptions are reasonable for real-world problems may be useful.

Before we even start thinking about assumptions, it’s important to realize that the word has multiple meanings. The meaning used here is “assumption = axiom” (i.e., something you cannot verify).

| Assumption | Reasonable? | Which analysis? | Example/notes |
| --- | --- | --- | --- |
| Independent and Identically Distributed Data | Sometimes | PAC, ERM, prediction bounds, statistics | The KDD cup 2004 physics dataset is plausibly IID data. There are a number of situations which are “almost IID” in the sense that IID analysis results in correct intuitions. Unreasonable in adversarial situations (stock market, war, etc.) |
| Independently Distributed Data | More than IID, but still only sometimes | online->batch conversion | Losing “identical” can be helpful in situations where you have a cyclic process generating data. |
| Finite exchangeability (FEX) | Sometimes reasonable | as for IID | There are a good number of situations where there is a population we wish to classify, we pay someone to classify a random subset, and then we try to learn. |
| Input space uniform on a sphere | No. | PAC, active learning | I’ve never observed this in practice. |
| Functional form: “or” of variables, decision list, “and” of variables | Sometimes reasonable | PAC analysis | There are often at least OK functions of this form that make good predictions. |
| No Noise | Rarely reasonable | PAC, ERM | Most learning problems appear to be of the form where the correct prediction given the inputs is fundamentally ambiguous. |
| Functional form: Monotonic on variables | Often | PAC-style | Many natural problems seem to have behavior monotonic in their input variables. |
| Functional form: xor | Occasionally | PAC | I was surprised to observe this. |
| Fast Mixing | Sometimes | RL | Interactive processes often fail to mix, ever, because entropy always increases. |
| Known optimal state distribution | Sometimes | RL | Sometimes humans know what is going on, and sometimes not. |
| Small approximation error everywhere | Rarely | RL | Approximate policy iteration is known for sometimes behaving oddly. |
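
To make the difference between the IID row and the finite exchangeability row concrete, here is a minimal sketch. It assumes a made-up population of 20 item ids, and the helper names `sample_iid` and `sample_exchangeable` are hypothetical, introduced only for illustration. Drawing with replacement gives independent draws, while drawing a random subset without replacement (the “pay someone to classify a random subset” setting) gives draws that are exchangeable but not independent, since an item already drawn cannot appear again.

```python
import random


def sample_iid(population, n, rng):
    """Draw n items independently, with replacement (an IID sample)."""
    return [rng.choice(population) for _ in range(n)]


def sample_exchangeable(population, n, rng):
    """Draw n distinct items without replacement.

    Every ordering of the result is equally likely (exchangeable),
    but the draws are not independent of each other.
    """
    return rng.sample(population, n)


rng = random.Random(0)          # fixed seed so the sketch is repeatable
population = list(range(20))    # hypothetical item ids, not real data

iid_draw = sample_iid(population, 20, rng)
fex_draw = sample_exchangeable(population, 20, rng)

# With replacement, repeats are possible; without replacement, never.
print("distinct items in IID draw:", len(set(iid_draw)))
print("distinct items in FEX draw:", len(set(fex_draw)))
```

When the population is much larger than the sample, the two schemes behave almost identically, which is roughly why the table lists FEX under the same analyses as IID.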

If anyone particularly agrees or disagrees with the reasonableness of these assumptions, I’m quite interested in hearing why.