Attempts to abstract and study machine learning are within some given framework or mathematical model. It turns out that all of these models are significantly flawed for the purpose of studying machine learning. I’ve created a table (below) outlining the major flaws in some common models of machine learning.

The point here is not simply “woe unto us”. There are several implications which seem important.

Here is a summary what is wrong with various frameworks for learning. To avoid being entirely negative, I added a column about what’s right as well.

| Name |

Methodology |

What’s right |

What’s wrong |

| Bayesian Learning |

You specify a prior probability distribution over data-makers, P(datamaker) then use Bayes law to find a posterior P(datamaker|x). True Bayesians integrate over the posterior to make predictions while many simply use the world with largest posterior directly. |

Handles the small data limit. Very flexible. Interpolates to engineering. |

- Information theoretically problematic. Explicitly specifying a reasonable prior is often hard.

- Computationally difficult problems are commonly encountered.

- Human intensive. Partly due to the difficulties above and partly because “first specify a prior” is built into framework this approach is not very automatable.

|

| Graphical/generative Models |

Sometimes Bayesian and sometimes not. Data-makers are typically assumed to be IID samples of fixed or varying length data. Data-makers are represented graphically with conditional independencies encoded in the graph. For some graphs, fast algorithms for making (or approximately making) predictions exist. |

Relative to pure Bayesian systems, this approach is sometimes computationally tractable. More importantly, the graph language is natural, which aids prior elicitation. |

- Often (still) fails to fix problems with the Bayesian approach.

- In real world applications, true conditional independence is rare, and results degrade rapidly with systematic misspecification of conditional independence.

|

| Convex Loss Optimization |

Specify a loss function related to the world-imposed loss fucntion which is convex on some parametric predictive system. Optimize the parametric predictive system to find the global optima. |

Mathematically clean solutions where computational tractability is partly taken into account. Relatively automatable. |

- The temptation to forget that the world imposes nonconvex loss functions is sometimes overwhelming, and the mismatch is always dangerous.

- Limited models. Although switching to a convex loss means that some optimizations become convex, optimization on representations which aren’t single layer linear combinations is often difficult.

|

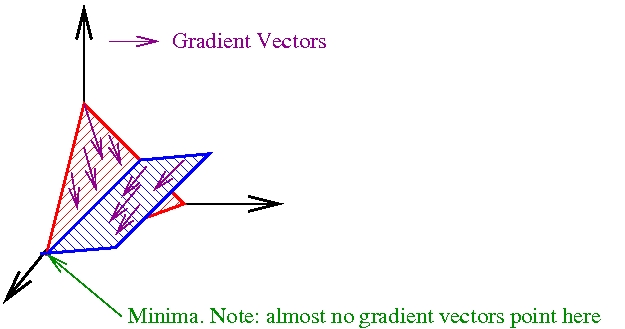

| Gradient Descent |

Specify an architecture with free parameters and use gradient descent with respect to data to tune the parameters. |

Relatively computationally tractable due to (a) modularity of gradient descent (b) directly optimizing the quantity you want to predict. |

- Finicky. There are issues with paremeter initialization, step size, and representation. It helps a great deal to have accumulated experience using this sort of system and there is little theoretical guidance.

- Overfitting is a significant issue.

|

| Kernel-based learning |

You chose a kernel K(x,x’) between datapoints that satisfies certain conditions, and then use it as a measure of similarity when learning. |

People often find the specification of a similarity function between objects a natural way to incorporate prior information for machine learning problems. Algorithms (like SVMs) for training are reasonably practical—O(n2) for instance. |

Specification of the kernel is not easy for some applications (this is another example of prior elicitation). O(n2) is not efficient enough when there is much data. |

| Boosting |

You create a learning algorithm that may be imperfect but which has some predictive edge, then apply it repeatedly in various ways to make a final predictor. |

A focus on getting something that works quickly is natural. This approach is relatively automated and (hence) easy to apply for beginners. |

The boosting framework tells you nothing about how to build that initial algorithm. The weak learning assumption becomes violated at some point in the iterative process. |

| Online Learning with Experts |

You make many base predictors and then a master algorithm automatically switches between the use of these predictors so as to minimize regret. |

This is an effective automated method to extract performance from a pool of predictors. |

Computational intractability can be a problem. This approach lives and dies on the effectiveness of the experts, but it provides little or no guidance in their construction. |

| Learning Reductions |

You solve complex machine learning problems by reducing them to well-studied base problems in a robust manner. |

The reductions approach can yield highly automated learning algorithms. |

The existence of an algorithm satisfying reduction guarantees is not sufficient to guarantee success. Reductions tell you little or nothing about the design of the base learning algorithm. |

| PAC Learning |

You assume that samples are drawn IID from an unknown distribution D. You think of learning as finding a near-best hypothesis amongst a given set of hypotheses in a computationally tractable manner. |

The focus on computation is pretty right-headed, because we are ultimately limited by what we can compute. |

There are not many substantial positive results, particularly when D is noisy. Data isn’t IID in practice anyways. |

| Statistical Learning Theory |

You assume that samples are drawn IID from an unknown distribution D. You think of learning as figuring out the number of samples required to distinguish a near-best hypothesis from a set of hypotheses. |

There are substantially more positive results than for PAC Learning, and there are a few examples of practical algorithms directly motivated by this analysis. |

The data is not IID. Ignorance of computational difficulties often results in difficulty of application. More importantly, the bounds are often loose (sometimes to the point of vacuousness). |

| Decision tree learning |

Learning is a process of cutting up the input space and assigning predictions to pieces of the space. |

Decision tree algorithms are well automated and can be quite fast. |

There are learning problems which can not be solved by decision trees, but which are solvable. It’s common to find that other approaches give you a bit more performance. A theoretical grounding for many choices in these algorithms is lacking. |

| Algorithmic complexity |

Learning is about finding a program which correctly predicts the outputs given the inputs. |

Any reasonable problem is learnable with a number of samples related to the description length of the program. |

The theory literally suggests solving halting problems to solve machine learning. |

| RL, MDP learning |

Learning is about finding and acting according to a near optimal policy in an unknown Markov Decision Process. |

We can learn and act with an amount of summed regret related to O(SA) where S is the number of states and A is the number of actions per state. |

Has anyone counted the number of states in real world problems? We can’t afford to wait that long. Discretizing the states creates a POMDP (see below). In the real world, we often have to deal with a POMDP anyways. |

| RL, POMDP learning |

Learning is about finding and acting according to a near optimaly policy in a Partially Observed Markov Decision Process |

In a sense, we’ve made no assumptions, so algorithms have wide applicability. |

All known algorithms scale badly with the number of hidden states. |

This set is incomplete of course, but it forms a starting point for understanding what’s out there. (Please fill in the what/pro/con of anything I missed.)